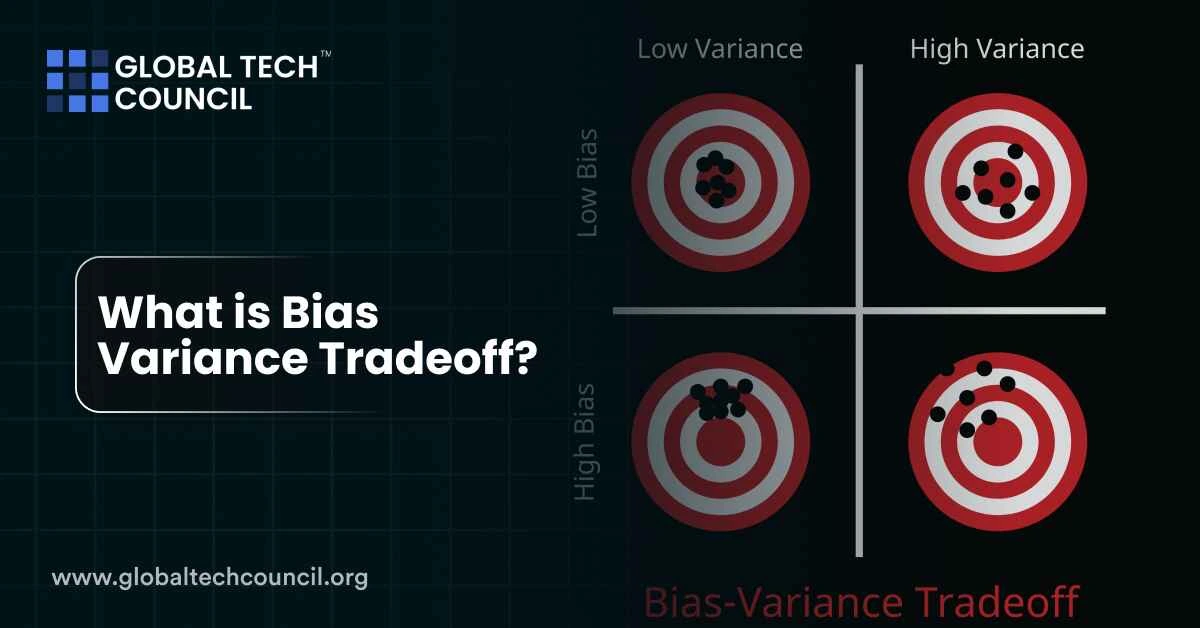

The bias-variance tradeoff explains why you can't make a machine learning model accurate just by making it more or less complex. When you make a model too simple, it makes large mistakes because it can't capture the full pattern. This is called high bias. But if the model is too complex, it fits the random noise in its training data as if it were signal, so it performs poorly on new data. This is called high variance.

The tradeoff is about finding the right balance. You want a model that learns enough to make accurate predictions but doesn’t overreact to the noise in your data. This simple idea is one of the most important concepts in building any machine learning system.

Why the Bias-Variance Tradeoff Matters

Every model tries to reduce error. Apart from random noise in the data, which no model can remove, that error comes from two sources:

- Bias: Happens when the model is too simple. It misses the real signal.

- Variance: Happens when the model is too sensitive. It fits the training data too closely.

Trying to lower one usually increases the other. That’s why there’s a tradeoff. The goal is not to eliminate both, but to minimize total error by choosing the right model complexity.
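For squared-error loss, this split can be written exactly. This is a sketch under the usual textbook assumptions: the data are generated as $y = f(x) + \varepsilon$ with zero-mean noise of variance $\sigma^2$, $\hat f$ is the model fitted on a randomly drawn training set, and expectations are taken over training sets and noise:

$$
\mathbb{E}\left[(y - \hat f(x))^2\right]
= \underbrace{\left(\mathbb{E}[\hat f(x)] - f(x)\right)^2}_{\text{bias}^2}
+ \underbrace{\mathbb{E}\left[\left(\hat f(x) - \mathbb{E}[\hat f(x)]\right)^2\right]}_{\text{variance}}
+ \underbrace{\sigma^2}_{\text{irreducible noise}}
$$

Making the model simpler tends to grow the first term and shrink the second. The third term is a floor that no choice of model can go below.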

Understanding Bias and Variance

Let’s break down both types clearly.

Bias

- Comes from incorrect assumptions in the model

- Leads to underfitting

- The model can’t capture complex relationships

Variance

- Comes from model sensitivity to training data

- Leads to overfitting

- The model performs well on training data but poorly on new data
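To make these two definitions measurable, the sketch below refits two models on many freshly drawn training sets and looks at their predictions at a single input. It assumes a sine-wave "true" function with Gaussian noise, a constant predictor as the too-simple model, and a 1-nearest-neighbour predictor as the too-flexible one. All of these choices are illustrative, and the code uses only the Python standard library.

```python
import math
import random
import statistics

def true_f(x):
    # Assumed "true" signal for this illustration: one period of a sine wave
    return math.sin(2 * math.pi * x)

def sample_training_set(rng, n=20, noise=0.3):
    # Draw a fresh noisy training set: x ~ Uniform(0, 1), y = f(x) + Gaussian noise
    xs = [rng.random() for _ in range(n)]
    ys = [true_f(x) + rng.gauss(0, noise) for x in xs]
    return xs, ys

def constant_model(xs, ys, x0):
    # Too simple: ignores x and predicts the training mean everywhere (high bias)
    return statistics.fmean(ys)

def one_nn_model(xs, ys, x0):
    # Very flexible: copies the label of the closest training point (high variance)
    i = min(range(len(xs)), key=lambda j: abs(xs[j] - x0))
    return ys[i]

def bias_variance_at(model, x0=0.25, n_sets=2000, seed=0):
    # Refit the model on many independent training sets and study its predictions at x0
    rng = random.Random(seed)
    preds = [model(*sample_training_set(rng), x0) for _ in range(n_sets)]
    bias_sq = (statistics.fmean(preds) - true_f(x0)) ** 2
    variance = statistics.pvariance(preds)
    return bias_sq, variance

for name, model in [("constant", constant_model), ("1-NN", one_nn_model)]:
    b2, var = bias_variance_at(model)
    print(f"{name:>8}: bias^2={b2:.3f}  variance={var:.3f}")
```

The constant model should show a large bias², because the sine averages to about zero while the true value at 0.25 is 1, together with a small variance. The 1-NN model shows the opposite pattern: almost no bias, but its prediction swings with the noise on whichever training point happens to be closest.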

Bias vs Variance

| Concept | Description | Model Behavior | Example Issue |
| --- | --- | --- | --- |
| Bias | Error from overly simple assumptions | Underfitting | Model misses key trends |
| Variance | Error from too much sensitivity to noise | Overfitting | Model memorizes noise |

The U-Shaped Error Curve

As model complexity increases:

- Bias decreases because the model becomes more flexible

- Variance increases because the model reacts more to fluctuations in the training data

If you plot the error on new data against model complexity, these two effects together form a U-shaped curve. The bottom of the curve is the sweet spot, where total error is minimized. That's where your model generalizes best.
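To see the U-shape on actual numbers, the sketch below sweeps the complexity of a k-nearest-neighbour regressor, where a smaller k (fewer neighbours averaged) gives a more flexible model. The sine-shaped target, the noise level, the sample sizes and the choice of k-NN are all illustrative assumptions rather than anything the article prescribes. The code uses only the Python standard library.

```python
import math
import random
import statistics

def make_data(rng, n, noise=0.3):
    # Synthetic data: y = sin(2*pi*x) + Gaussian noise (assumed for illustration)
    xs = [rng.random() for _ in range(n)]
    ys = [math.sin(2 * math.pi * x) + rng.gauss(0, noise) for x in xs]
    return xs, ys

def knn_predict(train_x, train_y, x0, k):
    # Average the labels of the k training points closest to x0
    nearest = sorted(range(len(train_x)), key=lambda i: abs(train_x[i] - x0))[:k]
    return statistics.fmean(train_y[i] for i in nearest)

def mse(train_x, train_y, xs, ys, k):
    # Mean squared error of the k-NN model (fit on train_x/train_y) on points xs/ys
    return statistics.fmean(
        (knn_predict(train_x, train_y, x, k) - y) ** 2 for x, y in zip(xs, ys)
    )

rng = random.Random(42)
train_x, train_y = make_data(rng, 100)
test_x, test_y = make_data(rng, 200)

results = {}
# Ordered from simple (large k, smooth fit) to complex (small k, wiggly fit)
for k in [100, 50, 20, 10, 5, 2, 1]:
    train_err = mse(train_x, train_y, train_x, train_y, k)
    test_err = mse(train_x, train_y, test_x, test_y, k)
    results[k] = (train_err, test_err)
    print(f"k={k:>3}  train MSE={train_err:.3f}  test MSE={test_err:.3f}")

best_k = min(results, key=lambda k: results[k][1])
print("lowest test error at k =", best_k)
```

Training error falls as k shrinks and reaches zero at k = 1, where each training point is its own nearest neighbour. Test error instead drops and then climbs again, so with these settings its minimum should land at an intermediate k rather than at either end of the sweep.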

Why It’s Still Relevant in 2025

Even with modern tools, the tradeoff is still valid. Deep learning models may seem to challenge this concept because they can have far more parameters than training examples and still generalize well. Research into this behavior has described a pattern called double descent: test error follows the usual U-shaped curve, peaks around the point where the model becomes just complex enough to fit the training data exactly, and then drops again as complexity keeps increasing.

Still, the basic lesson remains: you have to balance underfitting and overfitting.

Practical Examples

- Linear regression has low variance but high bias when the true pattern is non-linear.

- Decision trees can have low bias but very high variance if not pruned.

- Regularization methods like Lasso and Ridge help control variance without increasing bias too much.
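The regularization point can be checked with a short simulation. This sketch assumes a single feature with no intercept and inputs held fixed across simulated datasets. Under those assumptions ridge has the closed form w = Σxy / (Σx² + λ), and λ = 0 gives ordinary least squares (OLS). Ridge is used because of that closed form; Lasso is not shown. The true slope of 2, the noise level and λ = 2 are arbitrary illustrative values.

```python
import random
import statistics

# Illustrative fixed inputs, reused for every simulated dataset (fixed design)
XS = [-1.0, -0.75, -0.5, -0.25, 0.0, 0.25, 0.5, 0.75, 1.0]
TRUE_SLOPE = 2.0

def ridge_slope(xs, ys, lam):
    # Single-feature ridge with no intercept: w = sum(x*y) / (sum(x^2) + lam).
    # lam = 0 reduces to ordinary least squares.
    return sum(x * y for x, y in zip(xs, ys)) / (sum(x * x for x in xs) + lam)

def slope_distribution(lam, n_sets=2000, noise=1.0, seed=0):
    # Simulate many noisy datasets and return the mean and variance of the fitted slope
    rng = random.Random(seed)
    slopes = []
    for _ in range(n_sets):
        ys = [TRUE_SLOPE * x + rng.gauss(0, noise) for x in XS]
        slopes.append(ridge_slope(XS, ys, lam))
    return statistics.fmean(slopes), statistics.pvariance(slopes)

for lam in [0.0, 2.0]:
    mean_w, var_w = slope_distribution(lam)
    print(f"lambda={lam}: mean slope={mean_w:.3f} (true {TRUE_SLOPE}), variance={var_w:.3f}")
```

Because the inputs are fixed and Σx² = 3.75, every ridge estimate here is the OLS estimate scaled by 3.75 / (3.75 + λ). With λ = 2 the variance therefore falls to about 43% of the OLS value, while the average slope is pulled from about 2 down toward about 1.3. That added bias is the price of the lower variance, which is why λ is usually tuned, for example with cross-validation, instead of being set large.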

For a deeper dive into how to apply these concepts practically, especially in AI systems, explore this deep tech certification.

Strategies to Manage the Bias-Variance Tradeoff

| Strategy | What It Does | Helps With | Example Use Case |
| --- | --- | --- | --- |
| Regularization | Adds penalty for complexity | Reduces variance | Lasso, Ridge regression |
| Ensemble methods | Combines multiple models | Reduces variance | Random Forest, Bagging |
| Cross-validation | Estimates error on held-out data | Choosing a complexity that balances both | k-fold validation |
| Feature selection | Removes unnecessary inputs | Reduces variance | Improves signal-to-noise |
| Simpler algorithms | Limits model flexibility | Reduces variance | Logistic regression |
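Ensemble methods reduce variance by averaging many models, each fitted on a perturbed version of the data. Below is a minimal bagging sketch. It assumes toy sine data and uses a 1-nearest-neighbour regressor as the high-variance base model; Random Forest applies the same idea to decision trees. The sketch measures how much a single 1-NN prediction and a bagged one fluctuate across fresh training sets. The function names are hypothetical, and only the Python standard library is used.

```python
import math
import random
import statistics

def true_f(x):
    # Assumed "true" signal for this illustration
    return math.sin(2 * math.pi * x)

def one_nn(xs, ys, x0):
    # High-variance base model: label of the closest training point
    i = min(range(len(xs)), key=lambda j: abs(xs[j] - x0))
    return ys[i]

def bagged_one_nn(xs, ys, x0, rng, n_bags=25):
    # Bagging: fit 1-NN on bootstrap resamples and average their predictions
    preds = []
    for _ in range(n_bags):
        idx = rng.choices(range(len(xs)), k=len(xs))  # sample with replacement
        preds.append(one_nn([xs[i] for i in idx], [ys[i] for i in idx], x0))
    return statistics.fmean(preds)

def prediction_variance(n_sets=500, n=20, noise=0.3, x0=0.25, seed=1):
    # Compare how much each predictor's output at x0 varies across training sets
    rng = random.Random(seed)
    single, bagged = [], []
    for _ in range(n_sets):
        xs = [rng.random() for _ in range(n)]
        ys = [true_f(x) + rng.gauss(0, noise) for x in xs]
        single.append(one_nn(xs, ys, x0))
        bagged.append(bagged_one_nn(xs, ys, x0, rng))
    return statistics.pvariance(single), statistics.pvariance(bagged)

var_single, var_bagged = prediction_variance()
print(f"variance of a single 1-NN: {var_single:.3f}")
print(f"variance of bagged 1-NN:   {var_bagged:.3f}")
```

The bagged predictor should come out with noticeably lower variance. Averaging over bootstrap resamples spreads each prediction across several nearby training labels instead of trusting a single one.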

Tips for Finding the Right Balance

- Start simple, and add complexity only if needed

- Use cross-validation to check how models perform on new data

- Visualize learning curves to detect underfitting or overfitting

- Regularize if your model performs well on training but poorly on test data
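The cross-validation tip can be made concrete with a small k-fold sketch. It uses 5-fold cross-validation on synthetic sine data to score a k-nearest-neighbour regressor (an assumed example model) for several values of k, and keeps the k with the lowest average held-out error. `k_fold_mse` is a hypothetical helper written for this example, not a library function.

```python
import math
import random
import statistics

def knn_predict(train, x0, k):
    # train is a list of (x, y) pairs; average the labels of the k nearest points
    nearest = sorted(train, key=lambda p: abs(p[0] - x0))[:k]
    return statistics.fmean(y for _, y in nearest)

def k_fold_mse(data, k_neighbors, n_folds=5):
    # Hold out each fold once, fit on the remaining folds, and average the fold errors
    fold_errors = []
    for f in range(n_folds):
        held_out = data[f::n_folds]
        train = [p for i, p in enumerate(data) if i % n_folds != f]
        fold_errors.append(statistics.fmean(
            (knn_predict(train, x, k_neighbors) - y) ** 2 for x, y in held_out))
    return statistics.fmean(fold_errors)

rng = random.Random(7)
xs = [rng.random() for _ in range(100)]
# The x values are drawn in random order, so strided folds are effectively random folds
data = [(x, math.sin(2 * math.pi * x) + rng.gauss(0, 0.3)) for x in xs]

cv_scores = {k: k_fold_mse(data, k) for k in [1, 2, 5, 10, 20, 50]}
for k, score in cv_scores.items():
    print(f"k={k:>2}  5-fold CV MSE={score:.3f}")
best = min(cv_scores, key=cv_scores.get)
print("chosen k:", best)
```

Very small k should score poorly because of variance and very large k because of bias, so the chosen value sits in between. Once k is picked, you would normally refit the model on all of the data.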

Related Certifications to Explore

If you’re building models and want to avoid common performance pitfalls, the Data Science Certification is a great place to start.

For decision-makers or marketing professionals using AI in business, the Marketing and Business Certification can help you interpret model results more effectively.

Conclusion

The bias-variance tradeoff is one of the core ideas in machine learning. It teaches you that more complexity isn’t always better. Sometimes, simpler models generalize better. Sometimes, they miss the mark.

The key is to find the point where your model is complex enough to learn, but not so complex that it gets confused by noise. With the right tools and understanding, you can make that balance work in your favor.