A form of exploratory data analysis in which observations are divided into different groups with standard features is known as clustering analysis. The purpose of classification or cluster analysis is to ensure that different groups must have different observations as possible. The two main types of classification are K-Means clustering and Hierarchical Clustering. K-Means is used when the number of classes is fixed, while the latter is used for an unknown number of classes. Distance is used to separate observations into different groups in clustering algorithms. Clustering is an essential part of unsupervised machine learning training. This article covers the two broad types of clustering and their differences.

Before digging deeper into clustering, it is essential to go back to your machine learning course and refresh the classification basics.

Introduction to Clustering

Clustering is defined as dividing data points or population into several groups such that similar data points are in the same groups. The aim to segregate groups based on similar traits. Clustering can be divided into two subgroups, broadly:

- Soft Clustering– In this type of clustering, a likelihood or probability of the data point of being in a particular cluster is assigned instead of putting each data point into a separate cluster.

- Hard Clustering– Each data point either entirely belongs to a cluster or not at all.

The task of clustering is subjective; i.e., there are many ways of achieving the goal. There can be many different sets of rules for defining similarity among data points. Out of more than 100 clustering algorithms, a few are used correctly. They are as follows:

- Connectivity models– These models are based on the fact that data points closer in the data space exhibit more similarity than those lying farther away. The model’s first approach can classify data points into separate clusters and aggregation as the distance decreases. Another approach is classifying data points as a single cluster and partitioning as the distance increases. The choice of distance function is subjective. The models are easily interpreted but lack scalability for handling large datasets: example- Hierarchical clustering.

- Centroid models– Iterative clustering algorithms in which similarity is derived as the notion of the closeness of data point to the cluster’s centroid. Example- K-Means clustering. The number of clusters is mentioned in advance, which requires prior knowledge of the dataset.

- Distribution models– The models are based on the likelihood of all data points in the cluster belonging to the same distribution. Overfitting is common in these models. Example- Expectation-maximization algorithm

- Density models– These models search the data space for varying density areas. It isolates various density regions and assigns data points in the same cluster. Example- DBSCAN.

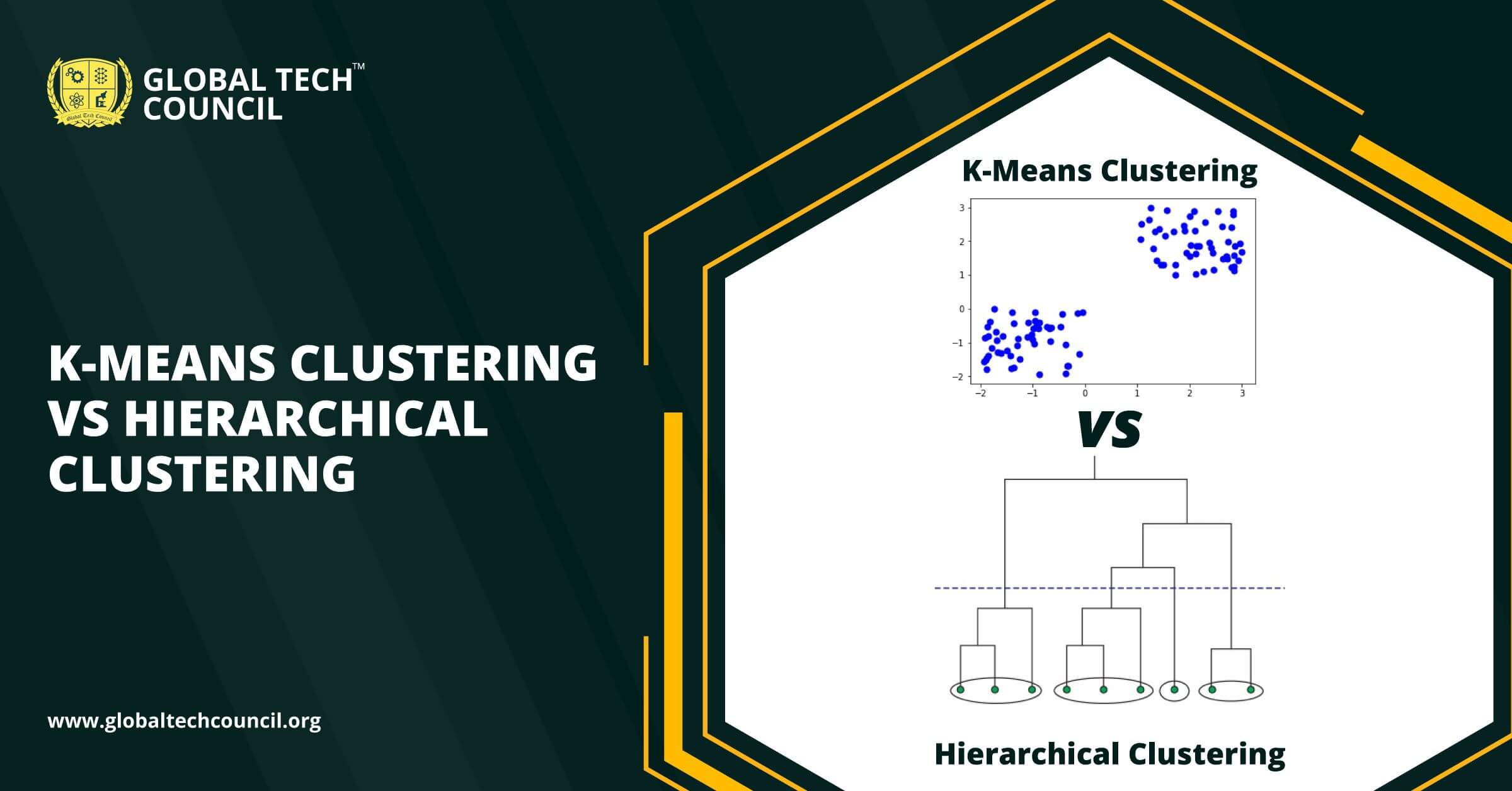

K-Means Clustering

The most common clustering covered in machine learning for beginners is K-Means. The first step is to create c new observations among our unlabelled data and locate them randomly, called centroids. The number of centroids represents the number of output classes. The first step of the iterative process for each centroid is to find the nearest point (in terms of Euclidean distance) and assign them to its category. Next, for each category, the average of all the points attributed to that class is computed. The output is the new centroid of the class.

With every iteration, the observations can be redirected to another centroid. After several reiterations, the centroid’s change in location is less critical as initial random centroids converge with real ones—the process ends when there is no change in centroids’ position. Many methods can be employed for the task, but a common one is ‘elbow method’. A low level of variation is needed within the clusters measured by the within-cluster sum of squares. The number of centroids and observations are inversely proportional. Thus, setting the highest possible number of centroids would be inconsistent.

Hierarchical Clustering

Two techniques are used by this algorithm- Agglomerative and Divisive. In HC, the number of clusters K can be set precisely like in K-means, and n is the number of data points such that n>K. The agglomerative HC starts from n clusters and aggregates data until K clusters are obtained. The divisive starts from only one cluster and then splits depending on similarities until K clusters are obtained. The similarity here is the distance among points, which can be computed in many ways, and it is the crucial element of discrimination. It can be computed with different approaches:

- Min: Given two clusters C1 and C2 such that point a belongs to C1 and b to C2. The similarity between them is equal to the minimum of distance

- Max: The similarity between points a and b is equal to the maximum of distance

- Average: All the pairs of points are taken, and their similarities are computed. Then the average of similarities is the similarity between C1 and C2.

K-Means vs Hierarchical

As we know, clustering is a subjective statistical analysis, and there is more than one appropriate algorithm for every dataset and type of problem. So how to choose between K-means and hierarchical?

- If there is a specific number of clusters in the dataset, but the group they belong to is unknown, choose K-means

- If the distinguishes are based on prior beliefs, hierarchical clustering should be used to know the number of clusters

- With a large number of variables, K-means compute faster

- The result of K-means is unstructured, but that of hierarchal is more interpretable and informative

- It is easier to determine the number of clusters by hierarchical clustering’s dendrogram

Conclusion

The article discussed various ways of performing clustering. The applications of clustering are found in a large number of domains for unsupervised learning. Clustering can also be used to improve the accuracy of the supervised machine learning algorithm. Although it is easy to implement, some critical aspects needed to be taken care of like treating outliers in the data and ensuring that each cluster has a sufficient population. All this is covered as a part of the machine learning certification. Sign up to learn more.

Leave a Reply